Nowadays, more and more young people are buying chat services on e-commerce platforms for virtual companionship, confiding in “chat teachers” to express their feelings. Prices range from tens of yuan for basic companionship to thousands of yuan for a customized "virtual lover". In recent years, virtual companionship services have become a fashionable way for young people to seek emotional comfort and make their voices heard online. Many stores on Taobao provide this service, offering personas such as the "gentle and cute little sweetheart" or the "overbearing, domineering president" type. As long as you pay, you can find your favorite "character".

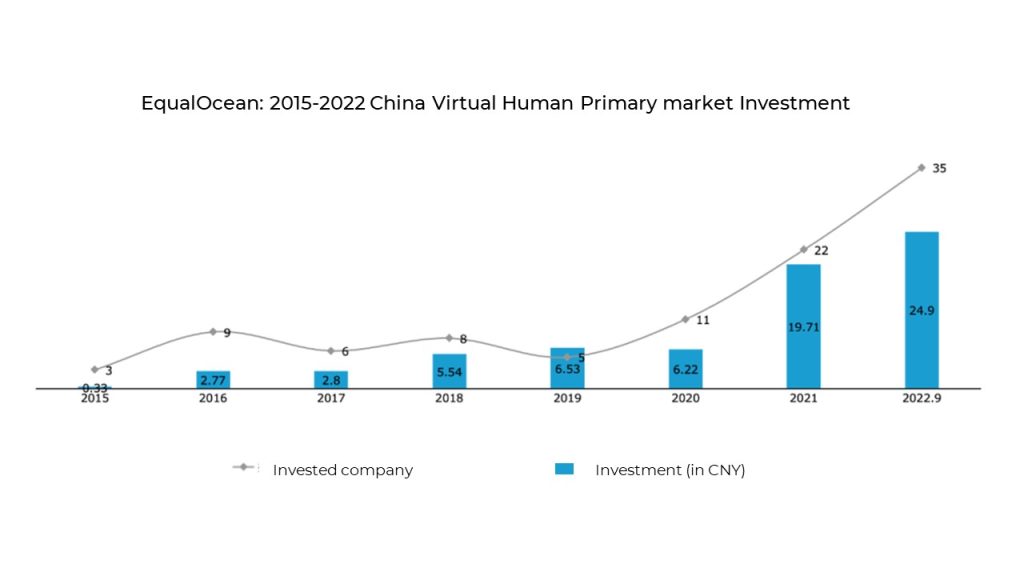

Virtual humans are springing up like mushrooms after rain. According to EqualOcean, a consultancy, as of September 2022 investment and financing in China’s virtual digital human sector had already exceeded the previous year's total, reaching 2.49 billion yuan. In 2015, this figure was only 33 million yuan, giving a compound annual growth rate of 97.71%. With such a large and fast-growing market, what makes virtual humans so fascinating?

Market Demand

The world that virtual characters offer is futuristic and borderless, a technological and artistic vision full of strange "images with nothing to see". People can build a good interactive relationship with virtual humans. The affection between a person and a virtual human feels mutual and equal, and new imaginings emerge through the interaction. People move constantly between being spectators and seeing themselves in the virtual character. So how is the powerful interactive ability of virtual humans realized?

Interaction Ability

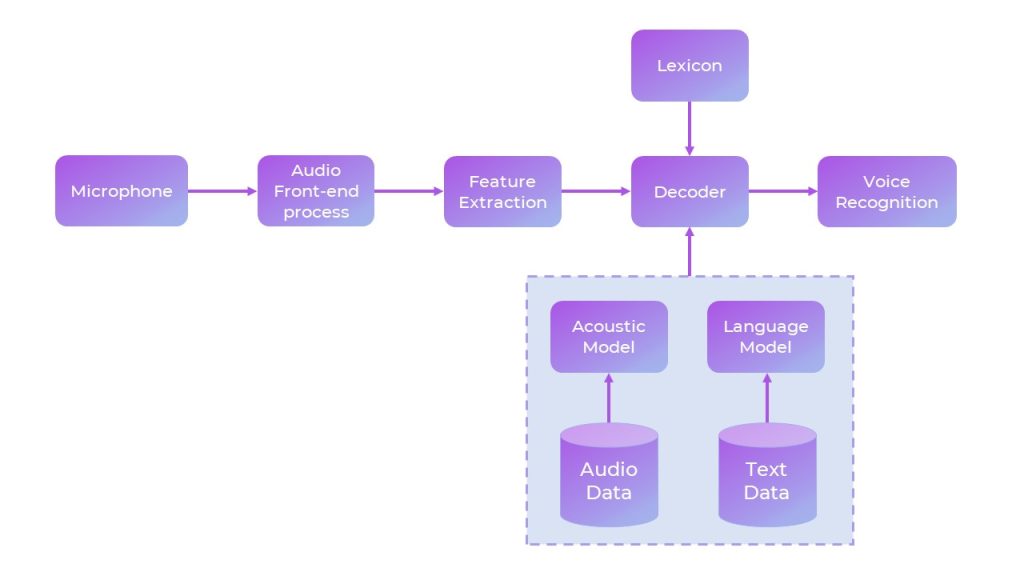

Interaction between a virtual human and a person relies on understanding and generating text, voice, and vision, combined with action recognition and driving, environmental perception, and other methods. Such multimodal human-computer interaction can closely simulate interaction between humans. Speech recognition and speech synthesis are its core functions. Put simply, speech recognition is the technology that enables computers to recognize and understand human speech and convert it into text. It relies on natural language processing (NLP) and machine learning to do so. The speech recognition flow chart for a virtual human is as follows:

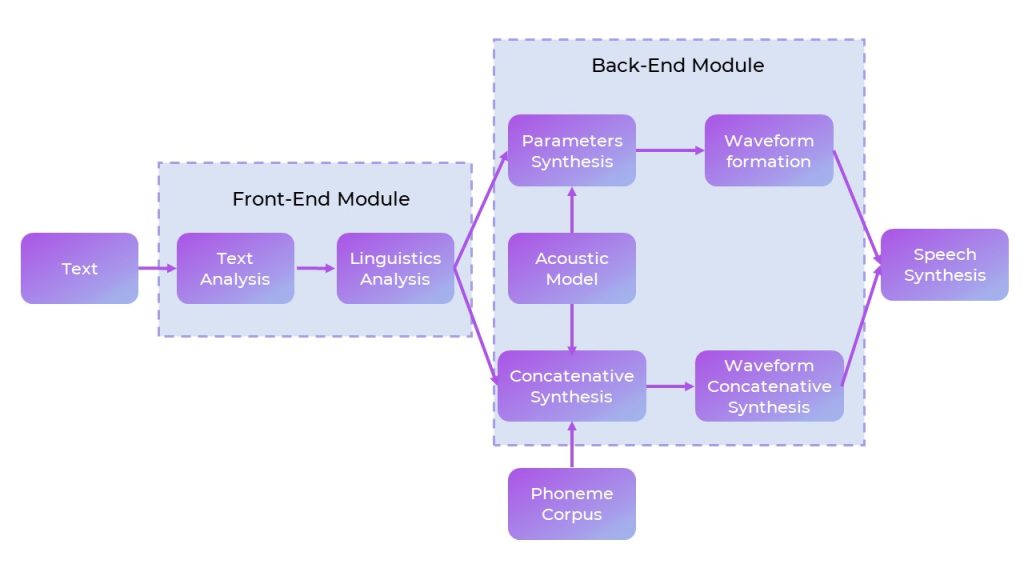

The charming voice of a virtual human is synthesized from the voices of voice actors. Speech synthesis is the artificial production of human speech. A computer system used for this purpose is called a speech synthesizer, and it can be implemented in software or hardware. A text-to-speech (TTS) system converts written text into speech. Its process is as follows:

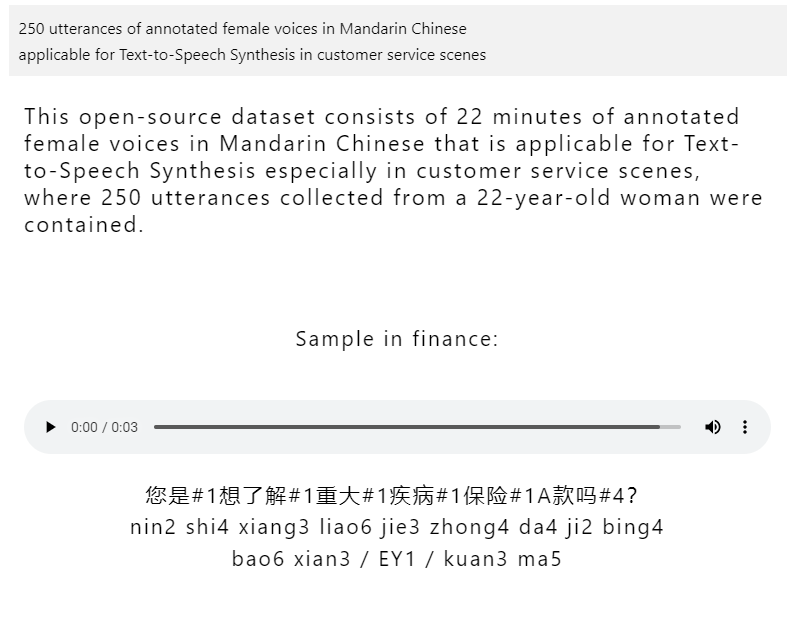

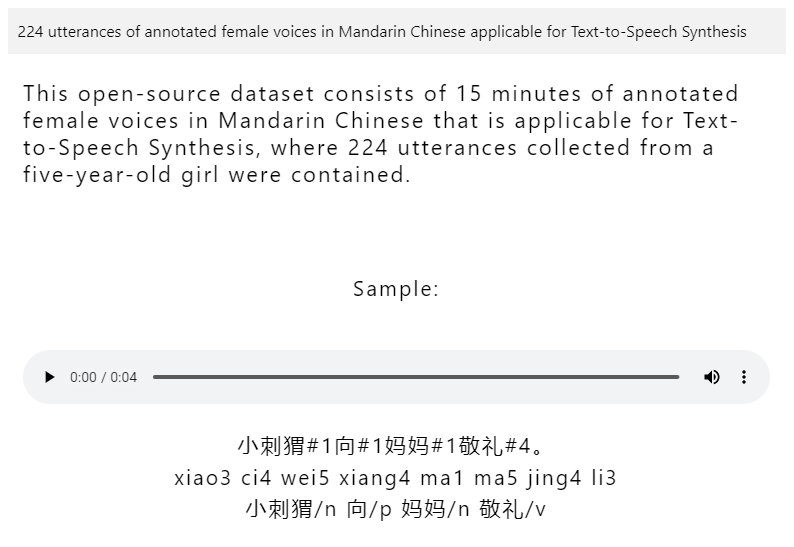
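To make the division of labor concrete, one conversational turn can be pictured as three stages chained together: speech recognition, a reply step, and speech synthesis. The following is only a minimal sketch under stated assumptions. The `VirtualHuman` class and the `fake_asr`, `fake_dialogue`, and `fake_tts` functions are hypothetical placeholders invented for illustration, not part of any real product or library, and a real system would plug trained models into each slot.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VirtualHuman:
    """One conversational turn: speech in, speech out (hypothetical sketch)."""

    recognize: Callable[[bytes], str]   # speech recognition: audio -> text
    respond: Callable[[str], str]       # dialogue step: user text -> reply text
    synthesize: Callable[[str], bytes]  # speech synthesis (TTS): text -> audio

    def turn(self, audio_in: bytes) -> bytes:
        text = self.recognize(audio_in)   # what the user said
        reply = self.respond(text)        # what the virtual human answers
        return self.synthesize(reply)     # the spoken answer


# Placeholder stages so the sketch runs without any trained model.
# Here "audio" is simply UTF-8 bytes standing in for a waveform.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_dialogue(text: str) -> str:
    return f"You said: {text}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


bot = VirtualHuman(fake_asr, fake_dialogue, fake_tts)
audio_out = bot.turn(b"hello")
```

Keeping each stage behind a plain function signature means a recognizer or synthesizer trained on a different corpus can be swapped in without touching the rest of the loop.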

Both speech synthesis and speech recognition algorithms for virtual humans require large, high-quality, precisely annotated corpora for training. The quality and quantity of data often determine how well a deep learning algorithm can be optimized. The larger the amount of data and the more accurate the labeling, the smarter the trained virtual human will be: its communication and interaction with people will be smoother, and its synthesized speech will sound more human-like. Data is the cornerstone of all deep learning tasks. Since researchers need to focus most of their energy on developing new algorithms and models, data collection often calls for the assistance of professional data companies. Magic Data is a professional AI data solutions provider with a large amount of ASR and TTS data. We provide speech recognition corpora covering a variety of languages and scenarios. We also have a large number of accurately annotated TTS corpora, examples of which are as follows:

TTS-SCCUSSERFSC: A Scripted Chinese Customer Service Female Speech Corpus

TTS-SCFCHILSC: A Scripted Chinese Female Child’s Speech Corpus

For more information, contact business@magicdatatech.com.